Semi-Supervised Forecasting and Anomaly Detection: Integrating Deep-Learning Techniques into Trisul Network Monitoring

As networks have grown significantly more sophisticated over the past decade, anomaly detection on network data has come to play a key security role in identifying unwanted (and possibly harmful) behaviour at an early stage. Over the past three months as a Machine Learning Engineer Intern here at Unleash Networks, I’ve researched ways to apply deep-learning techniques to two core components of Trisul, real-time traffic forecasting and top-k anomaly detection. I developed a variety of models specialized for these tasks and built their supporting infrastructure from scratch. This post provides a high-level, intuitive overview of some of the techniques developed during this research.

Network Traffic Forecasting using XGBoost-LSTM

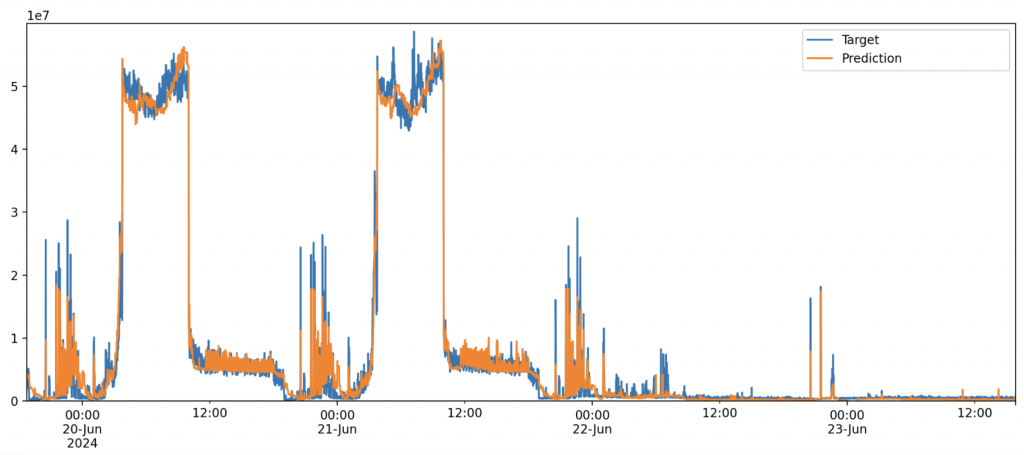

Forecasting is a fundamental tool for detecting anomalous behaviour in network time-series data. If network behaviour is predicted in advance, the observed (ground-truth) behaviour can be compared against these predictions, and outliers can be isolated accordingly. Such forecasting is akin to weather forecasting, which predicts temperature, humidity, wind, precipitation and other factors in advance as a function of time.
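The compare-and-isolate step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Trisul’s implementation. The function name `flag_forecast_anomalies`, the use of the median absolute deviation (MAD) of the residuals as the spread estimate, and the default threshold `k=3.0` are all hypothetical choices made for the example.

```python
from statistics import median


def flag_forecast_anomalies(actual, forecast, k=3.0):
    """Return indices where the observed value deviates unusually far from the forecast.

    Hypothetical helper: the residual spread is estimated with the median absolute
    deviation (MAD), so a few large outliers do not inflate the threshold.
    """
    if len(actual) != len(forecast):
        raise ValueError("actual and forecast must have the same length")
    residuals = [a - f for a, f in zip(actual, forecast)]
    centre = median(residuals)
    mad = median(abs(r - centre) for r in residuals)
    # 1.4826 rescales MAD to the standard deviation of normally distributed data;
    # the tiny fallback avoids division by zero when all residuals are identical.
    scale = 1.4826 * mad or 1e-9
    return [i for i, r in enumerate(residuals) if abs(r - centre) / scale > k]


# Hypothetical hourly traffic (Mbps) against a flat forecast; the spike at index 4 stands out.
observed = [100, 102, 98, 101, 250, 99, 100]
predicted = [100] * 7
print(flag_forecast_anomalies(observed, predicted))  # → [4]
```

MAD is used here instead of the standard deviation because a single large spike would otherwise inflate the threshold and partly mask itself.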

At Trisul, two machine learning models formed the basis of our forecasting research: XGBoost and Long Short-Term Memory (LSTM). The two models work on fundamentally different principles. Let’s dive into an overview of how each forecasting model works, and how each can be used in practice.

XGBoost for Network Traffic Forecasting

Initially released in 2014, XGBoost is an open-source gradient-boosting library. For forecasting, it is trained on time-series features, which are attributes derived from each timestep and from historical values, and uses them to generate a prediction for a particular timestep. For instance, when asked to predict network traffic on 19/08/2024 18:00, the model may use a combination of the following features, computed from historical data:

- Minute of the Hour
- Hour of the Day
- Day of the Week
- Week of the Year
- Holiday? (yes/no)
- Traffic Yesterday
- Traffic Last Week
- Traffic Last Month
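As a rough sketch of how such a feature row might be assembled, the snippet below builds one row per timestamp using only the standard library. Several details are assumptions for illustration only:

- the helper name `make_features`;
- the `history` layout (a dict from `datetime` to traffic value);
- approximating "last month" as 30 days.

Missing lags come back as `None`. Once loaded into a DataFrame these become NaN, which XGBoost treats as missing values.

```python
from datetime import datetime, timedelta


def make_features(ts, history, holidays=frozenset()):
    """Build one feature row for timestamp ts (hypothetical helper).

    history: dict mapping datetime -> observed traffic value (assumed layout).
    holidays: set of datetime.date objects treated as holidays.
    """
    return {
        "minute_of_hour": ts.minute,
        "hour_of_day": ts.hour,
        "day_of_week": ts.weekday(),          # Monday = 0
        "week_of_year": ts.isocalendar()[1],  # ISO week number
        "is_holiday": int(ts.date() in holidays),
        "traffic_yesterday": history.get(ts - timedelta(days=1)),
        "traffic_last_week": history.get(ts - timedelta(weeks=1)),
        # "Last month" is approximated as 30 days earlier (an assumption).
        "traffic_last_month": history.get(ts - timedelta(days=30)),
    }


ts = datetime(2024, 8, 19, 18, 0)
history = {ts - timedelta(days=1): 420.0, ts - timedelta(weeks=1): 390.0}
print(make_features(ts, history))
# day_of_week=0 (Monday), week_of_year=34, traffic_last_month=None
```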

Creating these features is part of feature engineering, and we experimented with a variety of feature sets before settling on an optimal one. This step is crucial to prediction accuracy, because XGBoost does not use neural networks to generate predictions. Instead, it is a boosting algorithm that builds an ensemble of decision trees sequentially, each new tree correcting the errors of the trees before it. This technique may seem less powerful than deep-learning techniques such as neural networks. However, on datasets with high periodicity (a strongly cyclic nature), XGBoost outperformed nearly every other modern forecasting model we evaluated. Additionally, in our research, XGBoost did not suffer from the divergence issues that affected neural networks when predicting over longer windows (such as a month in advance), and it remained comparatively lightweight to train and run while still performing strongly.
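To build intuition for boosting, here is plain gradient boosting with depth-1 trees ("stumps") on squared error, written with the standard library only. It is a simplified stand-in rather than XGBoost itself. XGBoost adds regularisation, second-order gradient information, deeper trees and heavily optimised split finding. All function names here are hypothetical, and `n_rounds` and `learning_rate` are illustrative defaults.

```python
def fit_stump(X, residuals):
    """Find the split (feature j, threshold t) whose two leaf means best fit the residuals."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            if not left or not right:
                continue  # a split must leave at least one row on each side
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, t, lv, rv)
    if best is None:  # every row identical: fall back to a constant leaf
        mean = sum(residuals) / len(residuals)
        return (0, float("inf"), mean, mean)
    return best[1:]  # (j, t, left_value, right_value)


def predict_stump(stump, row):
    j, t, left_value, right_value = stump
    return left_value if row[j] <= t else right_value


def fit_boosted_stumps(X, y, n_rounds=100, learning_rate=0.3):
    """Gradient boosting for squared error: each new stump is fitted to the current residuals."""
    base = sum(y) / len(y)
    preds = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        # For the (halved) squared-error loss, residuals are the negative gradient.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, row) for p, row in zip(preds, X)]
    return base, learning_rate, stumps


def predict(model, row):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, row) for s in stumps)


# Toy daily pattern: high traffic during working hours (9:00-17:00), low otherwise.
X = [[hour] for hour in range(24)]
y = [100.0 if 9 <= hour <= 17 else 20.0 for hour in range(24)]
model = fit_boosted_stumps(X, y)
print(predict(model, [12]), predict(model, [3]))
```

A single stump can only split the day once, so the sequence of stumps has to combine splits around 9:00 and 17:00. This is the "each tree corrects the previous ones" idea in miniature.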

Long Short-Term Memory for Network Traffic Forecasting

Long Short-Term Memory (LSTM) is a deep-learning architecture that builds on Recurrent Neural Networks (RNNs), adding gating mechanisms that help it model longer sequences. Unlike XGBoost, the LSTM does not rely on hand-crafted time-series features to generate predictions. Instead, it uses a context window consisting of the most recent steps of the time series to determine the next value.
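Preparing training data for a context-window model mostly means slicing the series into (context, next value) pairs. Below is a minimal sketch, assuming a plain Python list of traffic values and a hypothetical helper name `make_windows`. In a deep-learning framework, the contexts would then typically be stacked into a tensor of shape (samples, n, features) before being fed to the LSTM.

```python
def make_windows(series, n):
    """Turn a series into (context, target) pairs for a one-step-ahead model.

    Each context holds n consecutive values; the target is the value that follows them.
    """
    if n < 1:
        raise ValueError("context window size n must be at least 1")
    return [(series[i:i + n], series[i + n]) for i in range(len(series) - n)]


print(make_windows([10, 12, 11, 13, 15], n=3))
# → [([10, 12, 11], 13), ([12, 11, 13], 15)]
```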

As a result, the model responds more readily to short-term variations in traffic patterns and is significantly more versatile than boosting algorithms such as XGBoost. However, in our research, using an LSTM came with two downsides:

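- With a context window of size n, the model can forecast only about n consecutive steps before it begins diverging. Each new prediction is fed back into the window, so after n steps the window consists entirely of the model’s own predictions.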

- When trained on large context windows, the model is unable to effectively capture and use information from the earliest timesteps in the window.
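The first downside follows from how multi-step forecasts are commonly produced with a one-step model. Each prediction is appended to the context window and the oldest value is dropped. The sketch below illustrates this loop. The `model` argument is a hypothetical stand-in for a trained LSTM’s one-step prediction function, and the mean-of-window model in the example is purely a toy.

```python
from collections import deque
from typing import Callable, List, Sequence


def recursive_forecast(model: Callable[[List[float]], float],
                       history: Sequence[float], n: int, steps: int) -> List[float]:
    """Forecast `steps` values by feeding each prediction back into the context window."""
    if len(history) < n:
        raise ValueError("history must contain at least n values")
    window = deque(history[-n:], maxlen=n)
    forecasts = []
    for _ in range(steps):
        y_hat = model(list(window))
        forecasts.append(y_hat)
        # The oldest observed value drops out and the prediction takes its place;
        # after n steps the window holds only predictions, so errors compound.
        window.append(y_hat)
    return forecasts


toy_model = lambda w: sum(w) / len(w)  # hypothetical stand-in for an LSTM
print(recursive_forecast(toy_model, [1.0, 2.0, 3.0, 4.0], n=2, steps=3))
# → [3.5, 3.75, 3.625]
```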

Because the LSTM is generally much more responsive to traffic patterns, a model trained on one dataset can be re-applied to a different dataset, provided the two are broadly similar in their trends. Additionally, the LSTM’s dynamic nature lets it match, and often outperform, XGBoost over relatively short forecast windows.

Ensemble Modeling: XGBoost-LSTM

In our research, running both models in parallel and combining their results as an ensemble provided the most accurate forecasts, for both short and long forecast windows.

A weighted average that prioritizes the LSTM forecast over shorter windows, while relying primarily on XGBoost over longer forecast windows, proved to be an effective way of combining the two models.
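The exact weighting scheme is not spelled out here. The following is a minimal sketch of one plausible way to implement "LSTM for short horizons, XGBoost for long horizons": the LSTM’s weight decays exponentially with the forecast step. The function name `blend_forecasts` and the `half_life` parameter are hypothetical. `half_life` is the step at which both models receive equal weight.

```python
def blend_forecasts(lstm_fc, xgb_fc, half_life=12.0):
    """Combine two forecasts step by step, trusting the LSTM early and XGBoost later.

    LSTM weight at 0-based step h: w(h) = 0.5 ** (h / half_life).
    XGBoost receives the remaining weight 1 - w(h).
    """
    if len(lstm_fc) != len(xgb_fc):
        raise ValueError("forecasts must cover the same horizon")
    blended = []
    for h, (a, b) in enumerate(zip(lstm_fc, xgb_fc)):
        w = 0.5 ** (h / half_life)
        blended.append(w * a + (1 - w) * b)
    return blended


print(blend_forecasts([10, 10, 10], [20, 20, 20], half_life=1.0))
# → [10.0, 15.0, 17.5]
```

A smooth decay avoids a visible jump at the point where one model "takes over". A hard switch at a fixed step would be the simplest alternative.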

We also experimented with applying signal-processing methods, such as wavelet transforms, during feature engineering. These methods also improved overall performance, especially on highly periodic data.
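Which wavelet we used is not specified above. The sketch below uses the Haar wavelet, the simplest one, purely for illustration. One level of the transform splits a series into approximation coefficients, which carry the smooth, periodic trend, and detail coefficients, which carry short-term fluctuations. Either set could be used as extra model inputs or for denoising. The function names are hypothetical. For real use, a library such as PyWavelets (`pywt.wavedec`) offers many wavelet families and multi-level decompositions.

```python
import math


def haar_level(signal):
    """One level of the orthonormal Haar wavelet transform (signal length must be even)."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = 1 / math.sqrt(2)
    pairs = range(0, len(signal), 2)
    approx = [(signal[i] + signal[i + 1]) * s for i in pairs]  # local averages (trend)
    detail = [(signal[i] - signal[i + 1]) * s for i in pairs]  # local differences (fluctuation)
    return approx, detail


def inverse_haar_level(approx, detail):
    """Reconstruct the original signal from one level of Haar coefficients."""
    s = 1 / math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * s, (a - d) * s])
    return out


approx, detail = haar_level([4.0, 6.0, 10.0, 12.0])
print(approx, detail)
print(inverse_haar_level(approx, detail))  # recovers the input up to floating-point rounding
```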

Top-k Anomaly Detection using Variational Autoencoders (ConvAVAE)

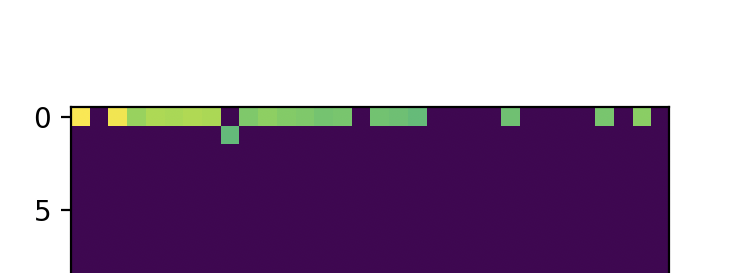

Although established anomaly detection models for time series (both univariate and multivariate) can be built using forecasting methods, several important metrics are also collected in a top-k structure (for instance, the k hosts with the highest traffic in each interval). Research on deep-learning frameworks for top-k data is limited. As a consequence, we developed a new deep-learning architecture for performant anomaly detection on such data.

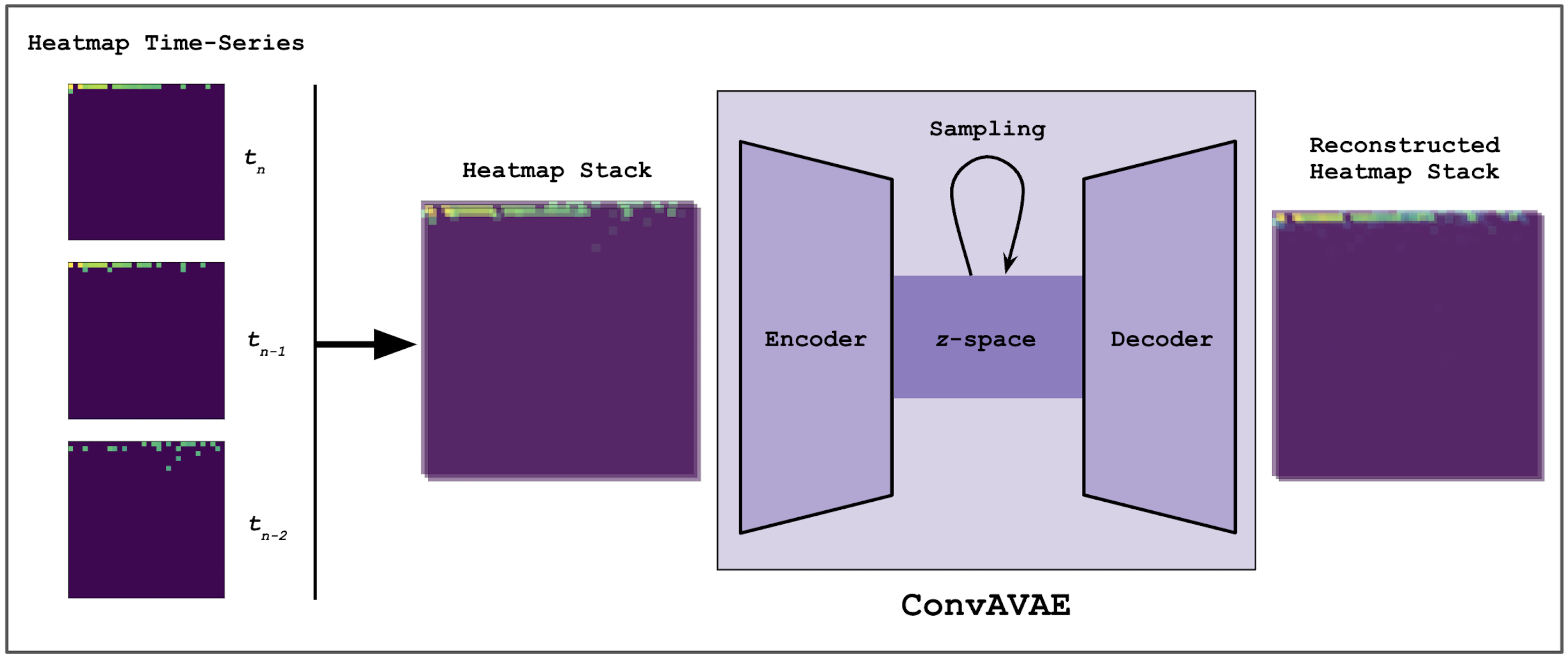

Given the volatility of top-k data (the set of items in the top k, and their ranks, can change from one interval to the next), traditional forecasting methods cannot be applied. Furthermore, data on anomalous behaviour is sparse, so high-quality labelled data is largely unavailable. We therefore developed a semi-supervised approach using custom variational autoencoders (called ConvAVAE here).
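ConvAVAE’s internals are not described in this post. For context, the sketch below shows the standard objective that variational autoencoders in general are trained on. The first term is a reconstruction error between the input and its reconstruction. The second is a Kullback-Leibler (KL) term that keeps the encoder’s diagonal Gaussian latent distribution close to a standard normal. Sampling uses the reparameterisation trick. Everything is written with plain lists for readability. In practice the encoder and decoder are neural networks, and a framework’s autograd computes the gradients. The function names and the `beta` weighting are illustrative assumptions.

```python
import math
import random


def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (the reparameterisation trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) for a diagonal Gaussian latent."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def vae_loss(x, x_recon, mu, log_var, beta=1.0):
    """Sum-of-squares reconstruction error plus the beta-weighted KL term."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + beta * kl_to_standard_normal(mu, log_var)


z = reparameterize([0.0, 0.0], [0.0, 0.0], rng=random.Random(0))  # one latent sample
print(vae_loss([1.0, 2.0], [1.0, 2.5], mu=[1.0], log_var=[0.0]))  # 0.25 + 0.5 = 0.75
```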

The idea is to use reconstruction-based anomaly detection, and a great analogy for it involves reconstructing images of cats.

The cat analogy.

Imagine you have a (massive) set of images, made up mostly of pictures of cats. However, the set also contains some images that are not cats (dogs, chickens, tables, mountains, or anything else) that you’d like to identify.

You can train an autoencoder on your set of (primarily) cat images. An autoencoder is a model that learns to encode an input image into a smaller representation and then reconstruct the image from that representation. The difference between the input image and the reconstructed image can be calculated mathematically using an error function, such as the mean squared error over pixel values.

The autoencoder learns to reconstruct cats with high accuracy and, given any image, attempts to generate a cat from it. So the moment a non-cat (say, a chicken) is passed into the model, it attempts, based on what it has learnt, to recover a cat from that image too.

The error between the chicken and the (cat-like) reconstructed chicken will therefore be abnormally large, so the chicken can be detected as an anomaly.
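In code, the analogy boils down to three steps:

1. Measure each sample’s reconstruction error.
2. Choose a threshold from errors on (mostly normal) training data.
3. Flag anything above it.

The sketch below assumes the reconstructions already come from a trained autoencoder. The post does not say how the threshold is chosen, so the nearest-rank percentile rule and its default `q=0.99` are assumptions, as are the helper names.

```python
import math


def mse(x, x_recon):
    """Mean squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_recon)) / len(x)


def percentile_threshold(errors, q=0.99):
    """Nearest-rank q-quantile of reconstruction errors on (mostly normal) training data."""
    ranked = sorted(errors)
    idx = min(len(ranked) - 1, max(0, math.ceil(q * len(ranked)) - 1))
    return ranked[idx]


def flag_anomalies(errors, threshold):
    """Indices of samples whose reconstruction error exceeds the threshold."""
    return [i for i, e in enumerate(errors) if e > threshold]


# "Cats" reconstruct well (small errors); the "chicken" does not.
train_errors = [0.10, 0.12, 0.09, 0.11, 0.13, 0.10, 0.08, 0.12]
threshold = percentile_threshold(train_errors, q=0.99)
new_errors = [mse([1.0, 2.0], [1.1, 2.0]), mse([1.0, 2.0], [3.0, 0.0])]
print(flag_anomalies(new_errors, threshold))  # → [1]
```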

Using this intuition, we developed a custom variational autoencoder architecture that can work on our data structure. By converting top-k data into image-like objects, we repurposed techniques from state-of-the-art generative AI models to learn to reconstruct these image-like objects.
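The conversion from top-k data to image-like objects is not detailed here. The sketch below shows one hypothetical scheme: each interval becomes a row, each distinct key (for example an IP address) becomes a column, and keys absent from an interval’s top-k are filled with zeros. The result is a 2-D grid that image-style models can consume. The function name, the column ordering and the zero fill are all assumptions. In practice one would likely also fix the vocabulary and normalise the values, for example on a log scale, before training.

```python
def topk_to_matrix(snapshots, vocab=None):
    """Convert a sequence of top-k snapshots into a 2-D grid (time x key).

    snapshots: list of dicts, one per interval, mapping key -> value for that interval's top-k.
    vocab: optional fixed list of keys giving the column order; by default every key seen,
    in order of first appearance. Keys missing from an interval's top-k are filled with 0.0.
    """
    if vocab is None:
        vocab = list(dict.fromkeys(key for snap in snapshots for key in snap))
    col = {key: j for j, key in enumerate(vocab)}
    grid = [[0.0] * len(vocab) for _ in snapshots]
    for t, snap in enumerate(snapshots):
        for key, value in snap.items():
            if key in col:  # keys outside a fixed vocabulary are dropped
                grid[t][col[key]] = float(value)
    return grid, vocab


snaps = [{"10.0.0.1": 500, "10.0.0.2": 300}, {"10.0.0.2": 450, "10.0.0.3": 120}]
grid, keys = topk_to_matrix(snaps)
print(keys)  # → ['10.0.0.1', '10.0.0.2', '10.0.0.3']
print(grid)  # → [[500.0, 300.0, 0.0], [0.0, 450.0, 120.0]]
```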

This novel approach substantially strengthens our capabilities in deep anomaly detection and network monitoring. In benchmark tests on our datasets, the model excelled at detecting abnormalities efficiently and consistently.

Conclusion

Trisul is working at the forefront of proactive anomaly detection and threat mitigation, enabling swift action to be taken against suspicious behaviour. As a Machine Learning Engineer Intern here, I’ve had the opportunity to implement existing models, as well as research and develop novel architectures, for Trisul’s network analytics. In this blog post, I’ve given an overview of my work. Deep-learning technologies have the potential to be applied far more widely and to improve further, enabling us to successfully tackle tougher industry-wide problems in the near future.

by Aniket Srinivasan Ashok

Machine Learning Engineer Intern @ Unleash Networks